What are you trying to accomplish?

- Support a new language? - Support a testing approach

- Extend an existing one? Which one? - It’s a custom plugin for Mutation Analysis.

- Add language-agnostic features? - currently only for Java and Kotlin

- Something else? - no

What’s your specific coding challenge in developing your plugin?

How can I make the custom metric available for the New Code condition? I tried adding the new_ prefix to the metric name, which makes it available under New Code, but I do not see any value for it on the Measures page. I can see that all the metrics are calculated for PR analysis and other branches after the change was made.

Thanks

Hi @Bangar_Milind,

I’m not sure I understand the problem.

You mention:

So, I understand from this that it worked: your custom metric is correctly computed only on New Code. Is that right?

Then you mention:

So, is your question about why you cannot see your custom metric on the Measures page of your project? Or, is your question on why your custom metric is not showing up on the Quality Gates page (as I would assume from the title of this thread).

Thanks for the clarification.

Thanks Wouter for the response, and apologies for not responding earlier.

Essentially, I want to show the “new_” metrics that I have added in the custom plugin on the Measures page.

Use case: I want to show the custom plugin metrics in PR builds on SonarQube, similar to Code Coverage, New Lines of Code, etc.

Hope I was clear on your queries.

Thanks

Milind

My turn to apologize for replying late.

OK, I think I understood. Please correct me if I’m wrong. Note this will only show on your PR if you somehow fail a quality gate condition.

Using our example plugin as a base, let’s imagine I add the following “new code” metric:

    // I would add this to src/main/java/org/sonarsource/plugins/example/measures/ExampleMetrics.java
    /* ... */
    public static final Metric<Integer> NEW_FILENAME_SIZE = new Metric.Builder("new_filename_size", "New Filename Size", Metric.ValueType.INT)
      .setDescription("Number of characters of file names")
      .setDirection(Metric.DIRECTION_WORST)
      .setQualitative(false)
      .setDomain(CoreMetrics.DOMAIN_SIZE)
      .setDeleteHistoricalData(true) // <-- Notice I'm setting this to true
      .create();
    /* ... */

I would then have some code to compute it. Example:

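    // Updating src/main/java/org/sonarsource/plugins/example/measures/SetSizeOnFilesSensor.java in this example.
    import org.sonar.api.batch.fs.FileSystem;
    import org.sonar.api.batch.fs.InputFile;
    import org.sonar.api.batch.sensor.Sensor;
    import org.sonar.api.batch.sensor.SensorContext;
    import org.sonar.api.batch.sensor.SensorDescriptor;

    import static org.sonarsource.plugins.example.measures.ExampleMetrics.NEW_FILENAME_SIZE;

    public class SetSizeOnFilesSensor implements Sensor {
      @Override
      public void describe(SensorDescriptor descriptor) {
        descriptor.name("Compute size of file names");
      }

      @Override
      public void execute(SensorContext context) {
        FileSystem fs = context.fileSystem();
        // all() selects every indexed file, both "main" and "tests"
        Iterable<InputFile> files = fs.inputFiles(fs.predicates().all());
        for (InputFile file : files) {
          // Save one measure per file: the length of its file name
          context.<Integer>newMeasure()
            .forMetric(NEW_FILENAME_SIZE)
            .on(file)
            .withValue(file.filename().length())
            .save();
        }
      }
    }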

When I deploy this to SonarQube (running version 10.2), I can now see this on the Measures page of a project (this requires a new analysis, or two if you have never analyzed the project before):

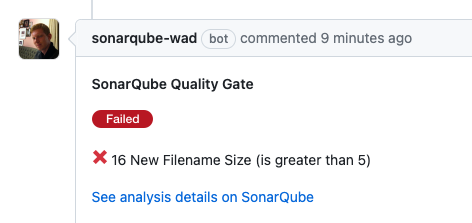

I can now also add a new condition on my quality gate for this (note that this isn’t something we recommend):

And, finally, if I analyze a pull request with all of this in place, I can see the failing condition show up on my PR:

Do these 2 code examples give you the missing pieces of the puzzle?

Thanks for the detailed explanation, Wouter. It really helps.

I will work on the metrics code now.

One question, though, about “Note this will only show on your PR if you somehow fail a quality gate condition”: does this apply to any “new” metric? If I have not configured a condition for it on the Quality Gate, will it not work?

Thanks in advance.

Regards

Milind

No, the pull request decoration will only show measures for failing quality gate conditions. If everything is fine, it only shows the default measures that relate to clean code (issues and default ratings).

If you absolutely need to add these measures to your PRs, you may want to look at writing a custom PostProjectAnalysisTask, which can be triggered after an analysis is completed by SonarQube. You could then use the API of the platform you’re opening PRs on to add your own decoration.
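To make the "add your own decoration" part a bit more concrete, here is a minimal sketch of the piece that talks to the PR platform. It is only an illustration under several assumptions: the platform is GitHub (using its "create an issue comment" REST endpoint), the measure values have already been obtained elsewhere, and `PrDecorationSketch` with all of its methods is a hypothetical helper, not part of the SonarQube plugin API. It uses only the JDK (Java 11+), and it builds the request without sending it.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper (not part of the SonarQube API): turns measures into a
// PR comment and prepares the HTTP call. Assumes GitHub as the PR platform.
final class PrDecorationSketch {

  private PrDecorationSketch() {
  }

  /** Renders metric key/value pairs as a small Markdown table. */
  static String buildCommentBody(Map<String, String> measures) {
    StringBuilder sb = new StringBuilder("| Metric | Value |\n| --- | --- |\n");
    for (Map.Entry<String, String> e : measures.entrySet()) {
      sb.append("| ").append(e.getKey()).append(" | ").append(e.getValue()).append(" |\n");
    }
    return sb.toString();
  }

  /** Minimal escaping (backslash, quote, newline only); a JSON library is safer for real use. */
  static String jsonEscape(String s) {
    return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
  }

  /** Builds, but does not send, a request that would add a comment to a GitHub PR. */
  static HttpRequest buildGitHubCommentRequest(String repo, int prNumber, String token, String body) {
    String json = "{\"body\":\"" + jsonEscape(body) + "\"}";
    return HttpRequest.newBuilder()
        .uri(URI.create("https://api.github.com/repos/" + repo + "/issues/" + prNumber + "/comments"))
        .header("Accept", "application/vnd.github+json")
        .header("Authorization", "Bearer " + token)
        .POST(HttpRequest.BodyPublishers.ofString(json))
        .build();
  }

  public static void main(String[] args) {
    // Placeholder values; in a plugin these would come from the finished analysis.
    Map<String, String> measures = new LinkedHashMap<>();
    measures.put("new_filename_size", "42");
    String body = buildCommentBody(measures);
    HttpRequest request = buildGitHubCommentRequest("my-org/my-repo", 7, "example-token", body);
    System.out.println(request.method() + " " + request.uri());
    System.out.println(body);
  }
}
```

In a real plugin, something like this would be called from your PostProjectAnalysisTask implementation once the analysis has finished, and the request would be sent with `java.net.http.HttpClient`. The repository name, PR number, and token above are placeholders, and other platforms (GitLab, Bitbucket, Azure DevOps) would need their own endpoint and authentication.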

Thanks Admiraal for the guidance. I will work on the suggestions and get back to you.