Hi SonarQube, we’re excited to launch our new plugin for SonarQube!

Since this is the first release of this plugin, please also add it to the Plugin Library page. Attached is the required information for joining the marketplace.

Description

The plugin adds OverOps event data as Issues and Metrics in Sonar.

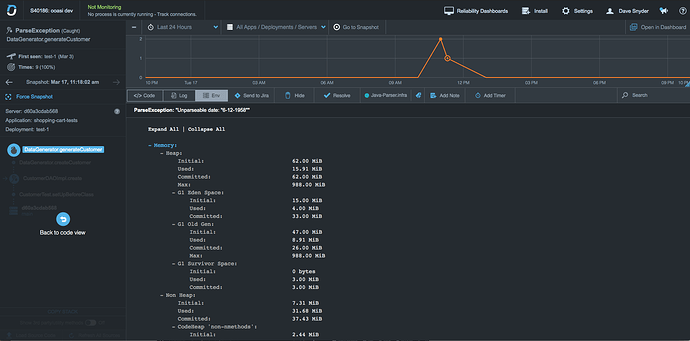

As a prerequisite, OverOps must be attached to a JVM-based application during the test phase of a CI pipeline. There it identifies runtime errors resulting from poor code quality, including uncaught and swallowed exceptions.

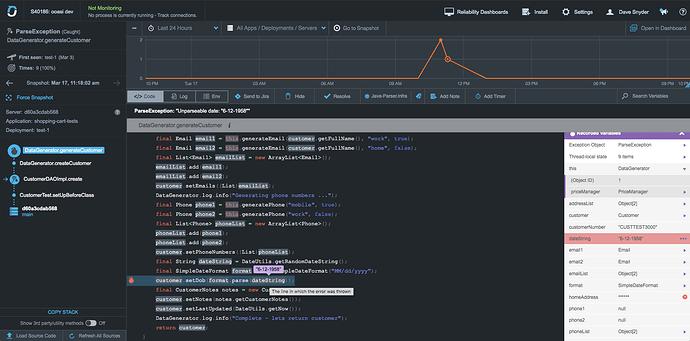

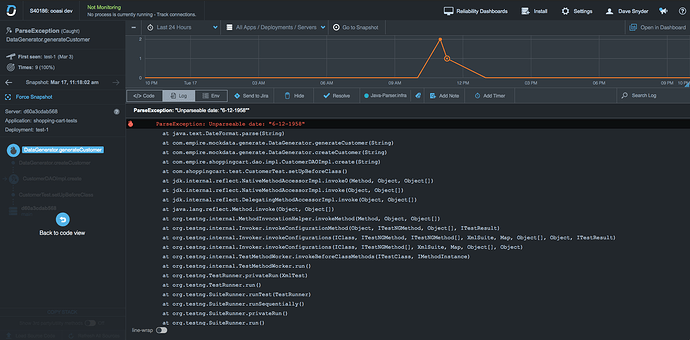

The OverOps plugin for SonarQube adds new Measures for Critical Errors, New Errors, Resurfaced Errors, and Unique Errors. These Measures can be used to create Quality Gates that prevent the promotion of low-quality code. In addition, an Issue is created for each event detected by OverOps, so the user can quickly identify the offending line. After the SonarScanner completes its analysis, comments are added that link to the OverOps error analysis screen for further context, including the complete variable state for each event detected.

While our plugin is free and open source, our product offers a trial that leads up to a commercial license. We’re happy to provide credentials for a test environment directly to anyone who would like them; however, we cannot post credentials in a public forum. Please email me directly to request access: dave.snyder@overops.com

Plugin homepage and documentation: https://github.com/takipi-field/sonar-plugin-overops

SonarCloud Dashboard: https://sonarcloud.io/dashboard?id=takipi-field_sonar-plugin-overops

SonarQube Compatibility: 7.9, latest

PR for sonar-update-center-properties: https://github.com/SonarSource/sonar-update-center-properties/pull/101

Please let us know if you have any questions!

I think you could do better on the description, with a discussion of why I should care about these exceptions that were caught by test cases (meaning they were expected, and perhaps were even what was being tested…?). Right now, this is what I see:

) I think you could do better on the description, with a discussion of why I care about these exceptions that were caught by test cases (meaning they were expected and perhaps even what was being tested…?). Because right now this is what I see: