Hi team,

We are planning to integrate SonarQube into our Azure DevOps pipelines in order to analyze code written and executed in Azure Databricks notebooks. We managed to configure the initial setup. The problem is that functions imported from other notebooks are not recognized as such. Other notebooks are called via the %run magic command. Every imported function is reported as a bug (saying the function is not defined).

Is there any way to configure the analysis so that functions imported from other notebooks via the %run command are recognized?

Any help is highly appreciated!

Hi,

What kind of code are you using inside Databricks? And which Sonar scanner are you using to analyze that code?

Thanks.

Mickaël

Hi,

We tried Python Databricks notebooks with the Python Sonar scanner. The code smell detection worked pretty well.

The problem appeared when function_x was defined in notebook_A but used in notebook_B, with %run notebook_A executed first in notebook_B. In this case, using function_x led to a bug in notebook_B saying it was not defined (although it is defined in notebook_A and imported from there).

Thanks!

Thanks.

One of my colleagues, who knows the Python analyzer much better than I do, will take over this thread. Stay tuned!

Mickaël

Hi @MareikeZinram,

Thank you for using our Python analyzer.

Currently we don’t have anything specific for Databricks notebooks.

To help us investigate the problem you mentioned, it would be very helpful if you could share the Python code you’re analyzing.

Hi @Andrea_Guarino ,

Thanks for your answer! As only pictures are accepted, I can’t upload the entire notebooks here. But here is a very small example:

Notebook 1 is called helper and has only two lines, which define the function print_one():

    def print_one():
        print(1)

Notebook 2 is called main and also has two commands:

%run ./helper

print_one()

%run ./helper makes all functions defined in the helper notebook available in the main notebook. In Databricks, we cannot use ‘from helper import print_one’.

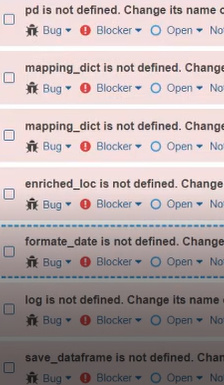

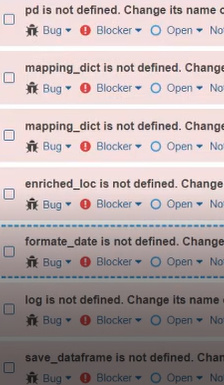

print_one() will show up as a bug in SonarCloud saying the function is not defined.

Does that help? Again, many thanks!

@MareikeZinram Thank you for this example.

Just for additional context:

The %run command is one of Databricks’ “magic commands”. The Databricks documentation says that it is roughly equivalent to a Python import statement.

Databricks magic commands are currently not supported by our Python analyzer. I recommend simply disabling the corresponding rules in your Quality Profile.
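To illustrate why the function is reported as undefined, here is a minimal sketch. It is a simplified stand-in, not how the Sonar Python analyzer actually works. It assumes that in the exported notebook file the magic command appears as a comment line (`# MAGIC %run ./helper`), and the two source strings are hypothetical copies of the helper and main notebooks from this thread. A purely static look at main finds no definition of print_one(), whereas at runtime the helper code has already been executed in the same namespace.

```python
import ast
import builtins

# Hypothetical stand-ins for the two notebooks from this thread.
HELPER_SOURCE = "def print_one():\n    print(1)\n"
# Assumption: the magic command shows up as a comment in the exported file.
MAIN_SOURCE = "# MAGIC %run ./helper\nprint_one()\n"


def defined_functions(source):
    """Names of all functions defined anywhere in the given source."""
    tree = ast.parse(source)
    return {n.name for n in ast.walk(tree) if isinstance(n, ast.FunctionDef)}


def called_names(source):
    """Names of all plain function calls such as foo() in the given source."""
    tree = ast.parse(source)
    return {
        n.func.id
        for n in ast.walk(tree)
        if isinstance(n, ast.Call) and isinstance(n.func, ast.Name)
    }


def undefined_calls(source):
    """Called names that are neither defined in the source nor builtins."""
    candidates = called_names(source) - defined_functions(source)
    return {name for name in candidates if not hasattr(builtins, name)}


# Static view of main alone: the comment is ignored, so print_one is unknown.
print(undefined_calls(MAIN_SOURCE))

# Runtime view: emulate %run by executing helper in a shared namespace first.
namespace = {}
exec(HELPER_SOURCE, namespace)
exec(MAIN_SOURCE, namespace)  # calls print_one() successfully
```

Analyzing the two sources together (`undefined_calls(HELPER_SOURCE + MAIN_SOURCE)`) yields an empty set, which is roughly what an analyzer would need to do to understand %run.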

Another solution would be to create a Databricks library. The documentation says that import works in this case.
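As a rough sketch of that second option: if the helper code lives in a regular Python module rather than a notebook, it can be imported with standard Python machinery, and the definition sits in an ordinary .py file that an analyzer can read. The module name `helper_lib` and the temporary directory are purely illustrative assumptions, and packaging and installing the module as a Databricks library is not shown here.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Hypothetical module name and contents, mirroring the helper notebook.
MODULE_NAME = "helper_lib"
MODULE_SOURCE = "def print_one():\n    print(1)\n"


def import_helper(directory):
    """Write the helper code as a plain .py module and import it normally."""
    Path(directory, MODULE_NAME + ".py").write_text(MODULE_SOURCE)
    if directory not in sys.path:
        sys.path.insert(0, directory)
    importlib.invalidate_caches()
    return importlib.import_module(MODULE_NAME)


# A temporary directory stands in for wherever the library would be installed.
with tempfile.TemporaryDirectory() as tmp:
    helper_lib = import_helper(tmp)
    helper_lib.print_one()
```

In a real project the main code would simply contain `from helper_lib import print_one`, with the module installed on the cluster.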

Thanks @Nicolas_Harraudeau!

Yes, it is roughly equivalent to a Python import statement. I guess we will try your first suggestion and will have to live with the fact that truly undefined functions will not be detected by the automated code check.

Thanks